Web applications were originally developed around a client/server model in which the Web client always initiates transactions by requesting data from the server. As a result, there was no mechanism for the server to independently send, or push, data to the client without the client first making a request.

To overcome this limitation, Web app developers can implement a technique called HTTP long polling, in which the client polls the server for new information. The server holds the request open until new data is available. Once it is, the server responds with the new information. As soon as the client receives it, the client sends another request, and the cycle repeats.

What is HTTP Long Polling?

So, what is long polling? HTTP Long Polling is a variation of standard polling that emulates a server pushing messages to a client (or browser) efficiently.

Long polling was one of the first techniques developed to allow a server to ‘push’ data to a client, and because of its longevity, it has near-ubiquitous support across browsers and web technologies. Even in an era of protocols designed specifically for persistent bidirectional communication (such as WebSockets), long polling still has a place as a fallback mechanism that works almost everywhere.

How does HTTP Long Polling work?

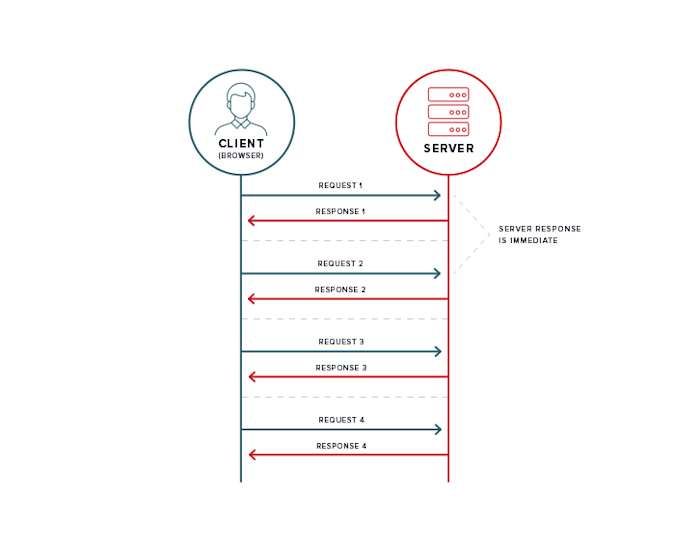

To understand long polling, first consider standard HTTP polling.

“Standard” HTTP Polling

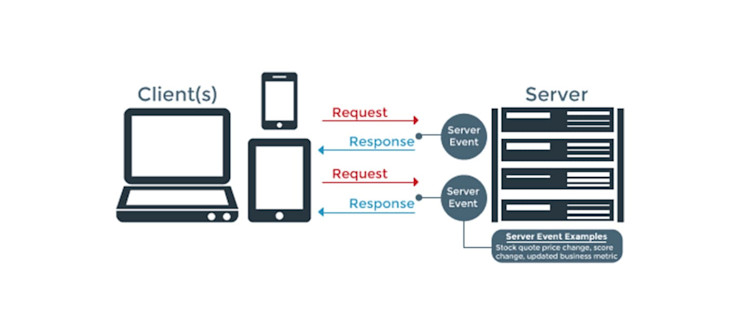

HTTP Polling consists of a client (for example, a web browser) continually asking a server for updates.

One use case would be a user who wants to follow along with a rapidly developing news story. The user has loaded the story’s web page in their browser and expects that page to update as the story unfolds. One way to achieve this is for the browser to repeatedly ask the news server, “Are there any updates to the story?” The server then responds with the updates, or with an empty response if there are none. The rate at which the browser asks for updates determines how quickly the news page updates: too much time between requests means important updates are delayed, while too little time between requests means lots of ‘no update’ responses, which waste resources.

Above: HTTP Polling between a web browser and server. The browser makes repeated requests to the server, which responds immediately.

There are downsides to this “standard” HTTP polling:

There is no perfect time between requests for updates. Requests will always be either too frequent (and inefficient) or too slow (and updates will take longer than required).

As you scale and the number of clients increases, the number of requests to the server increases too. Because many of these requests return no new data, server and network resources are consumed without purpose.
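To make the trade-off concrete, here is a small Python simulation. It is a sketch under simplifying assumptions (zero network latency, instant server responses, made-up update times): the client polls every `interval` seconds, and each poll returns whatever updates were published since the previous one. `simulate_polling` and `PollStats` are illustrative names, not part of any library.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class PollStats:
    requests: int         # total requests sent
    empty_responses: int  # requests that returned no updates
    max_delay: float      # worst wait between an update and its delivery


def simulate_polling(update_times: list[float], interval: float, duration: float) -> PollStats:
    """Simulate a client that polls every `interval` seconds for `duration` seconds."""
    updates = sorted(update_times)
    requests = empty = 0
    max_delay = 0.0
    i = 0  # index of the next undelivered update
    for k in range(1, int(duration // interval) + 1):
        t = k * interval  # time of the k-th poll
        requests += 1
        delivered = False
        # The server responds immediately with every update published so far.
        while i < len(updates) and updates[i] <= t:
            max_delay = max(max_delay, t - updates[i])
            i += 1
            delivered = True
        if not delivered:
            empty += 1
    return PollStats(requests, empty, max_delay)


if __name__ == "__main__":
    news = [5.0, 6.0, 30.0]  # hypothetical update times, in seconds
    print(simulate_polling(news, interval=1.0, duration=60.0))   # frequent polling
    print(simulate_polling(news, interval=20.0, duration=60.0))  # infrequent polling
```

With a 1-second interval, 57 of the 60 requests come back empty; with a 20-second interval only 3 requests are sent, but the first update waits 15 seconds before it is delivered.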

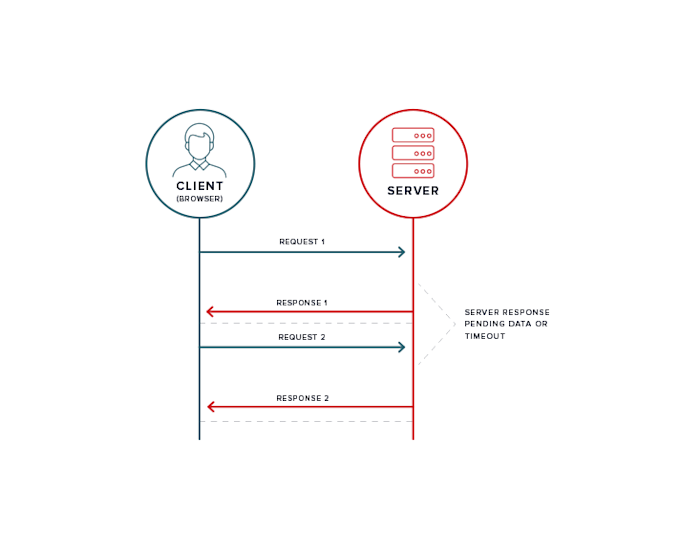

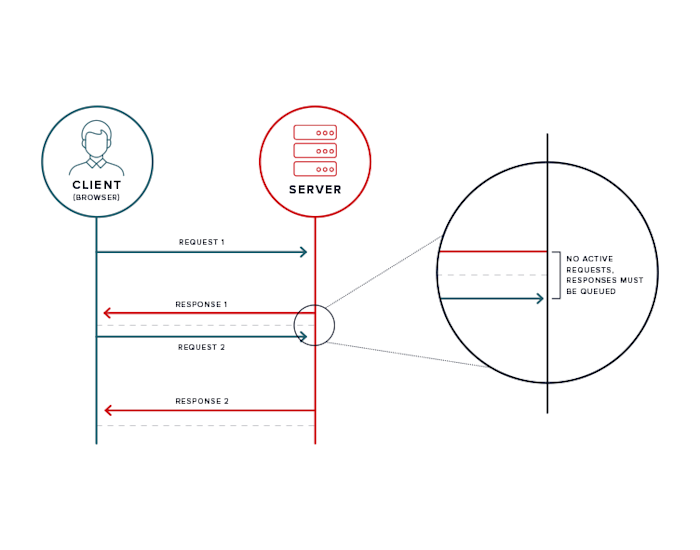

HTTP Long Polling addresses the downsides of polling with HTTP

Requests are sent to the server from the browser, as before.

The server does not close the connection; instead, it keeps the connection open until there is data to send.

The client waits for a response from the server.

When data is available, the server sends it to the client.

The client immediately makes another HTTP long-polling request to the server.

Above: HTTP Long Polling between a client and server. Note that there is a long time between request and response as the server waits until there is data to send.
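The request/response cycle described above can be sketched in a single Python process with `asyncio`. This is an illustrative model, not a real HTTP server: `LongPollServer` is a hypothetical in-memory stand-in whose `poll()` coroutine plays the role of an HTTP request that the server holds open, and the integer `cursor` (an assumption of this sketch) tells the server which messages the client has already seen.

```python
from __future__ import annotations

import asyncio


class LongPollServer:
    """Hypothetical in-memory stand-in for a long-polling HTTP endpoint."""

    def __init__(self) -> None:
        self._messages: list[str] = []
        self._cond = asyncio.Condition()

    async def publish(self, message: str) -> None:
        async with self._cond:
            self._messages.append(message)
            self._cond.notify_all()  # wake every request that is being held open

    async def poll(self, cursor: int) -> tuple[list[str], int]:
        """Hold the "request" open until a message newer than `cursor` exists."""
        async with self._cond:
            await self._cond.wait_for(lambda: len(self._messages) > cursor)
            return self._messages[cursor:], len(self._messages)


async def client(server: LongPollServer, expected: int) -> list[str]:
    received: list[str] = []
    cursor = 0
    while len(received) < expected:
        # One long-poll request: it only returns once there is data to deliver.
        batch, cursor = await server.poll(cursor)
        received.extend(batch)
        # Loop around and immediately issue the next request.
    return received


async def demo() -> list[str]:
    server = LongPollServer()
    listener = asyncio.create_task(client(server, expected=3))
    for msg in ["update 1", "update 2", "update 3"]:
        await asyncio.sleep(0.01)  # data becomes available some time after the request
        await server.publish(msg)
    return await listener


if __name__ == "__main__":
    print(asyncio.run(demo()))
```

Because the server keeps the message list and the client sends its cursor, a message published while the client is between requests is still delivered on the next poll. Note also that every response the client receives carries data; there are no empty round trips.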

This is much more efficient than regular polling.

The browser receives each update as soon as it is available.

The server is not inundated with requests that will never be fulfilled.
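A rough way to see the efficiency gain is to count requests. The sketch below assumes zero network latency and a server that answers as soon as an update exists, or with an empty response after `hold` seconds (how long that hold should be is discussed below). `long_poll_requests` is a hypothetical helper and the update times are made up.

```python
from __future__ import annotations


def long_poll_requests(update_times: list[float], hold: float, duration: float) -> int:
    """Count requests a long-polling client issues during `duration` seconds.

    Assumes zero latency: the server responds the moment an update exists, or
    with an empty response after `hold` seconds, and the client re-polls at once.
    """
    updates = sorted(update_times)
    t = 0.0  # time at which the current request is sent
    requests = 0
    i = 0  # index of the next undelivered update
    while t < duration:
        requests += 1
        deadline = t + hold
        if i < len(updates) and updates[i] <= deadline:
            t = max(t, updates[i])  # the response goes out when the update arrives
            while i < len(updates) and updates[i] <= t:
                i += 1  # every update available at that moment goes in one response
        else:
            t = deadline  # hold expired: empty response, client re-polls
    return requests


if __name__ == "__main__":
    news = [5.0, 6.0, 30.0]  # hypothetical update times, in seconds
    print("long polling:", long_poll_requests(news, hold=280.0, duration=60.0))
    print("polling every 1 s:", int(60.0 // 1.0))
```

For the three updates above, long polling issues 4 requests in a minute (three that carry data and one still held open at the end), versus 60 for polling once per second.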

How long is a long poll?

In the real world, any client connection to a server will eventually time out. How long the server holds the connection open before it responds depends on several factors: the server's protocol implementation, the server architecture, the client's headers and implementation (in particular the HTTP Keep-Alive header), and any libraries used to initiate and maintain the connection.

Of course, any number of external factors can also affect the connection. For example, a mobile browser is more likely to temporarily drop the connection as it switches between WiFi and cellular networks.

In general, there is no single poll duration unless you control the entire architecture stack. Where PubNub uses long polling, the duration is set to 280 seconds; outside of PubNub, you might see long polls lasting anywhere from 100 to 300 seconds.
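On the server side, the hold duration is typically enforced with a timeout: if nothing arrives in time, the server sends an empty response before the connection is cut by something else, and the client simply polls again. Below is a minimal `asyncio` sketch, assuming a per-client `asyncio.Queue` of pending messages and a hypothetical dict-shaped response. `HOLD_SECONDS` mirrors the 280-second figure above but is just a parameter here.

```python
from __future__ import annotations

import asyncio

HOLD_SECONDS = 280.0  # the PubNub figure quoted above; other stacks use ~100-300 s


async def handle_poll(queue: asyncio.Queue, hold_seconds: float = HOLD_SECONDS) -> dict:
    """Hold a long-poll request open until a message arrives or the hold expires."""
    try:
        message = await asyncio.wait_for(queue.get(), timeout=hold_seconds)
    except asyncio.TimeoutError:
        # Nothing to send: answer with an empty body so the client re-polls,
        # rather than letting a proxy or the client's own timeout drop the connection.
        return {"status": 200, "messages": []}
    # Include anything else that queued up in the meantime.
    messages = [message]
    while not queue.empty():
        messages.append(queue.get_nowait())
    return {"status": 200, "messages": messages}


async def demo() -> tuple[dict, dict]:
    queue: asyncio.Queue = asyncio.Queue()
    timed_out = await handle_poll(queue, hold_seconds=0.05)  # nothing published yet
    queue.put_nowait("update")
    answered = await handle_poll(queue, hold_seconds=0.05)   # data ready: returns at once
    return timed_out, answered


if __name__ == "__main__":
    print(asyncio.run(demo()))
```

A reasonable companion choice is a client-side request timeout somewhat longer than the server's hold, so the server's empty response normally arrives before the client gives up.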

Considerations when using Long Polling

There are several things to consider when using HTTP long polling to build real-time interactivity into your application, both in development and in operations and scaling.

As usage grows, how will you orchestrate your real-time backend?

When mobile devices rapidly switch between WiFi and cellular networks or lose connections, and the IP address changes, does long polling automatically re-establish connections?

With long polling, can you manage the message queue and catch up on missed messages?

Does long polling provide load balancing or failover support across multiple servers?

When building a real-time application with HTTP long polling for server push, you’ll have to develop your own communication management system. This means that you’ll be responsible for updating, maintaining, and scaling your backend infrastructure.
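One piece of that system is reconnection: long polling does not re-establish dropped connections on its own, so the client has to retry. A common approach is exponential backoff with "full jitter", sketched below under these assumptions: `poll_once` is a hypothetical callable that performs one long-poll request and raises `OSError` (for example `ConnectionResetError`) on network failure, and the base, cap, and attempt limits are illustrative values.

```python
from __future__ import annotations

import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def backoff_delay(attempt: int, base: float = 0.5, cap: float = 30.0,
                  rng: random.Random | None = None) -> float:
    """Full-jitter backoff: a random delay in [0, min(cap, base * 2**attempt)]."""
    if rng is None:
        rng = random.Random()
    return rng.uniform(0.0, min(cap, base * 2 ** attempt))


def poll_with_reconnect(poll_once: Callable[[], T], max_attempts: int = 8,
                        sleep: Callable[[float], None] = time.sleep,
                        rng: random.Random | None = None) -> T:
    """Retry a long-poll request after network errors, backing off between tries."""
    if rng is None:
        rng = random.Random()
    for attempt in range(max_attempts):
        try:
            return poll_once()
        except OSError:
            # Connection dropped (e.g. WiFi-to-cellular handover): wait, then retry.
            if attempt < max_attempts - 1:
                sleep(backoff_delay(attempt, rng=rng))
    raise ConnectionError(f"long poll failed after {max_attempts} attempts")


def demo() -> tuple[str, list[float]]:
    failures = [ConnectionResetError("wifi dropped"), ConnectionResetError("handover")]
    slept: list[float] = []

    def flaky_poll() -> str:
        # Hypothetical request: fails twice, then succeeds.
        if failures:
            raise failures.pop(0)
        return "messages"

    result = poll_with_reconnect(flaky_poll, sleep=slept.append, rng=random.Random(42))
    return result, slept


if __name__ == "__main__":
    print(demo())
```

The random jitter spreads out reconnect attempts, so a network blip that disconnects many clients at once does not bring them all back at the same instant.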

Server performance and scaling

Every client that uses your solution will initiate a connection to your server at least every 5 minutes, and your server will need to allocate resources to manage that connection until it is ready to fulfill the client’s request. Once the request is fulfilled, the client immediately re-initiates the connection, so in practice the server must permanently allocate some proportion of its resources to servicing that client. As your solution grows beyond the capabilities of a single server and you introduce load balancing, you need to consider session state: how do you share client state among your servers? How do you cope with mobile clients connecting from different IP addresses? How do you handle potential denial-of-service attacks?

None of these scaling challenges are unique to HTTP long polling, but they can be exacerbated by the design of the protocol. For example, how do you distinguish between multiple clients making genuine, back-to-back requests and a denial-of-service attack?
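There is no complete answer to that question, but one possible heuristic is to cap the number of long polls a single client may hold open at once: a well-behaved client has at most one outstanding request, so extra concurrent requests under the same ID are suspicious. The sketch below assumes clients identify themselves with a hypothetical `client_id`; `OpenPollLimiter` is an illustrative name, and it does nothing against an attacker who rotates IDs or IP addresses.

```python
from __future__ import annotations


class OpenPollLimiter:
    """Track open long polls per client and refuse any beyond `max_open`."""

    def __init__(self, max_open: int = 1) -> None:
        self.max_open = max_open
        self._open: dict[str, int] = {}

    def try_open(self, client_id: str) -> bool:
        """Register a new poll; False means reject it (e.g. HTTP 429)."""
        count = self._open.get(client_id, 0)
        if count >= self.max_open:
            return False
        self._open[client_id] = count + 1
        return True

    def close(self, client_id: str) -> None:
        """Call when a poll is answered, times out, or the connection drops."""
        count = self._open.get(client_id, 0)
        if count <= 1:
            self._open.pop(client_id, None)
        else:
            self._open[client_id] = count - 1


def demo() -> list[bool]:
    limiter = OpenPollLimiter(max_open=1)
    results = [limiter.try_open("alice")]       # first poll: accepted
    results.append(limiter.try_open("alice"))  # concurrent second poll: rejected
    results.append(limiter.try_open("bob"))    # another client: accepted
    limiter.close("alice")                     # alice's poll is answered
    results.append(limiter.try_open("alice"))  # her immediate re-poll: accepted
    return results


if __name__ == "__main__":
    print(demo())
```

Because the limit is on concurrent polls rather than on request rate, a genuine client that re-polls immediately after every response is never penalized.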

Message ordering and queueing

There will always be a short window between the server sending data to the client and the client initiating its next polling request, during which data might be lost.

Any data the server wants to send to the client in this period needs to be cached and delivered to the client on the next request.

Several obvious questions then arise:

How long should the server cache or queue the data?

How should failed client connections be handled?

How does the server know that the same client is re-connecting, vs. a new client?

If the reconnection took a long time, how does the client request data that falls outside of the cache window?

All of these questions need to be answered by an HTTP long polling solution.
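One common approach that addresses several of these questions at once is to give every message a sequence number and have the client send back the last position it reached. The sketch below is a minimal, single-process illustration with assumed names (`MessageBuffer`, `since`, `gap`). A fixed-size window bounds how much data is cached, the cursor lets the server send a reconnecting client exactly what it missed, and the `gap` flag tells a client that reconnected too late that some messages have already left the window. Such a client would then need another source of history, which this sketch does not provide.

```python
from __future__ import annotations

from collections import deque
from dataclasses import dataclass


@dataclass
class PollResult:
    messages: list[str]
    next_cursor: int  # the client sends this back on its next poll
    gap: bool         # True if requested messages already fell out of the window


class MessageBuffer:
    """Bounded cache of recent messages, addressed by sequence number."""

    def __init__(self, window: int = 100) -> None:
        self._buf: deque[tuple[int, str]] = deque(maxlen=window)  # oldest drop off
        self._next_seq = 0

    def publish(self, message: str) -> int:
        seq = self._next_seq
        self._buf.append((seq, message))
        self._next_seq += 1
        return seq

    def since(self, cursor: int) -> PollResult:
        """Return every cached message with a sequence number >= `cursor`."""
        oldest = self._buf[0][0] if self._buf else self._next_seq
        return PollResult(
            messages=[msg for seq, msg in self._buf if seq >= cursor],
            next_cursor=self._next_seq,
            gap=cursor < oldest,
        )


def demo() -> list[PollResult]:
    buf = MessageBuffer(window=3)
    for i in range(5):
        buf.publish(f"m{i}")  # seq 0..4; only 2, 3 and 4 remain cached
    # Caught-up client, badly out-of-date client, fully up-to-date client.
    return [buf.since(3), buf.since(0), buf.since(5)]


if __name__ == "__main__":
    for result in demo():
        print(result)
```

The `window` size is the answer to "how long should the server cache the data?" expressed as a message count; a time-based expiry would be an equally valid design.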

Device and network support

As mentioned earlier, since HTTP long polling has been around for a long time, it enjoys near-ubiquitous support amongst browsers, servers, and other network infrastructure (switches, routers, proxies, firewalls). This level of support means long polling is a great fallback mechanism, even for solutions that rely on more modern protocols such as WebSockets.

WebSocket implementations, especially early implementations, have been known to struggle with double NAT and certain proxy environments where HTTP long polling works well.
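In practice, using long polling as a fallback usually means trying the preferred transport first and dropping down to long polling when it fails. The sketch below shows only that selection logic. The connector functions are hypothetical placeholders (a real client would open an actual WebSocket or issue an HTTP request), and `TransportError` is an assumed exception type.

```python
from __future__ import annotations

from typing import Callable, Sequence


class TransportError(Exception):
    """Raised by a connector when its transport cannot be established."""


def connect_with_fallback(
    transports: Sequence[tuple[str, Callable[[], object]]],
) -> tuple[str, object]:
    """Try each (name, connector) pair in order; return the first that works."""
    errors: list[str] = []
    for name, connect in transports:
        try:
            return name, connect()
        except TransportError as exc:
            errors.append(f"{name}: {exc}")
    raise TransportError("all transports failed: " + "; ".join(errors))


def open_websocket() -> object:
    # Hypothetical: pretend a proxy or double NAT blocks the WebSocket upgrade.
    raise TransportError("upgrade blocked by proxy")


def open_long_poll() -> object:
    # Hypothetical: plain HTTP requests get through.
    return "long-poll session"


if __name__ == "__main__":
    print(connect_with_fallback([("websocket", open_websocket),
                                 ("long-polling", open_long_poll)]))
```

Keeping the transports in an ordered list makes the preference explicit and lets long polling sit at the end as the option most likely to work everywhere.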

Does PubNub use HTTP Long Polling?

PubNub is protocol agnostic, so the answer is both yes and no. This support article gives some great background, but in summary, PubNub uses a combination of protocols so that, from a client’s point of view, messages are exchanged using the most efficient and reliable mechanism possible, without unnecessary configuration.

Key Benefits of Protocol Agnostic Real-time Messaging

Instead of relying solely on HTTP long polling for real-time messaging, a protocol agnostic approach is beneficial. PubNub automatically chooses the best protocols and frameworks depending on environment, latency, etc. Any server or client code wanting to communicate makes a single API call to publish or subscribe to data channels.

The code is identical between clients and servers, making implementation much simpler than with HTTP long polling. In terms of connection management, the PubNub network handles redundancy, routing topology, load balancing, failover, and channel access.

Additionally, PubNub offers core building blocks for building real-time into your application:

Presence – Detects when users enter/leave your app and whether machines are online, for applications like in-app chat.

Storage & Playback – Store real-time message streams for future retrieval and playback.

Mobile Push Gateway – Manage the complexities of real-time apps on mobile devices, including mobile push notifications.

Access Management – Fine-grained Publish and Subscribe permissions down to the person, device, or channel, for HIPAA-compliant chat applications or other sensitive use cases.

Security – Secure all communications with enterprise-grade encryption standards.

Analytics – Gain visibility into your real-time data streams through visualizations.

Data Sync – Sync application state across clients in real time, for digital classrooms and multiplayer game development.

Sign up for a free trial to learn more about building with PubNub.