What is a Modern Tech Stack? Technology Infrastructure

What is a Technology Stack?

A technology stack, often referred to as a tech stack, defines the ecosystem that enables the development, deployment, and maintenance of software. It is the combination of programming languages, frameworks, libraries, and tools used to build and run an app or project. It includes both the front-end (client-side) and back-end (server-side) components, as well as databases, infrastructure, and development tools.

Components of a Technology Stack:

Front-end (user interface), e.g., React, Angular

Back-end (server-side frameworks), e.g., Express.js, Django

Databases, e.g., MySQL, MongoDB

Infrastructure (DevOps), e.g., AWS, Docker, GCP

Development tools (planning, processing, QA), e.g., VS Code, Git

Frontend (Client-Side & UI):

Languages: The core languages that run in the browser, such as HTML, CSS, and JavaScript.

Frameworks/Libraries: Tools like React, Angular, or Vue.js that provide structure and reusable components to enhance development efficiency.

Build Tools: Webpack, Babel, and other tools used to compile, bundle, and optimize frontend assets.

Backend (Server-Side):

Languages: Server-side programming languages such as Python, Java, Ruby, JavaScript (Node.js), or PHP that handle the business logic, database interactions, and server-side processing.

Frameworks: Backend frameworks like Django, Spring, Express.js, or Ruby on Rails that provide a foundation for building server-side applications by offering built-in functionality such as routing, middleware, and database ORM (Object-Relational Mapping).

APIs: Architectural styles and query languages like REST or GraphQL that allow the frontend to communicate with the backend.

Database:

Relational Databases: Systems like MySQL, PostgreSQL, or Oracle that use Structured Query Language (SQL) to manage data stored in tables with defined relationships.

NoSQL Databases: Databases like MongoDB, Cassandra, or Redis that store unstructured or semi-structured data, often in a key-value, document, wide-column, or graph format.
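To make the distinction concrete, here is a minimal sketch using only Python's standard library. `sqlite3` stands in for a relational database, and a dictionary serialized as JSON stands in for a document store. The table names, fields, and sample data are made up for illustration and are not taken from any particular product.

```python
import json
import sqlite3

# Relational: rows live in tables with a fixed schema and a declared relationship.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT NOT NULL)")
conn.execute(
    "CREATE TABLE messages ("
    "id INTEGER PRIMARY KEY, "
    "user_id INTEGER NOT NULL REFERENCES users(id), "
    "body TEXT NOT NULL)"
)
conn.execute("INSERT INTO users (id, name) VALUES (1, 'alice')")
conn.execute("INSERT INTO messages (user_id, body) VALUES (1, 'hello')")

# SQL joins the two tables through the user_id relationship.
relational_rows = conn.execute(
    "SELECT users.name, messages.body "
    "FROM messages JOIN users ON users.id = messages.user_id"
).fetchall()

# Document style: one self-contained, semi-structured record.
# Different documents may carry different fields.
document = {
    "user": {"name": "alice"},
    "messages": [{"body": "hello"}],
    "tags": ["demo"],
}
stored = json.dumps(document)   # roughly what a document store would persist
loaded = json.loads(stored)
```

The relational version needs a join to reassemble the user and the message, while the document version keeps them together in one record at the cost of a schema the database can enforce.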

DevOps/Infrastructure:

Servers: Physical or cloud-based servers (e.g., AWS EC2, Google Cloud, Azure) where the application runs.

Containers: Docker, which packages an application and its dependencies into a standardized unit for consistent development and deployment, and Kubernetes, which orchestrates those containers across machines.

CI/CD: Continuous Integration/Continuous Deployment tools like Jenkins, CircleCI, or GitLab CI that automate testing, building, and deploying applications.

Version Control: Git, used with platforms like GitHub, GitLab, or Bitbucket to track code changes and collaborate with other developers.

Development Tools (QA Testing):

Unit Testing: Frameworks like Jest, JUnit, or Mocha that allow developers to write tests for individual units of code.

Integration Testing: Verifies that different components or systems work together, using tools like Selenium, Postman, or Cypress.

End-to-End Testing: Tests the complete workflow of an application, often using tools like Cypress, Puppeteer, or TestCafe.

Visual Studio Code (VS Code): A lightweight, powerful code editor with extensive extensions and language support.

Git: A version control system for tracking changes in code and collaborating with teams.

Monitoring and Logging:

Monitoring: Software like Illuminate, Prometheus, Grafana, or New Relic that tracks the health, performance, and uptime of applications.

Logging: Tools like the ELK Stack (Elasticsearch, Logstash, Kibana) or Splunk that collect and analyze log data from applications.

Managing Data Connections Between Peers

Building a modern application that lets two users communicate is fairly easy. However, expanding a real-time app to connect many users across the globe is more challenging: you must maintain a reliable and secure “always-on” connection between peers.

Scaling with a Modern Tech Stack is Key

Scalability is a challenge you should think about from the beginning. You’ve built an amazing app, but when the user base grows beyond your expectations, your tech stack must be able to handle the increased load of users and data. How can you best prepare for this?

Real-time with a Modern Tech Stack

A real-time application performs an action in response to an event within a fraction of a second. The key indicator of real-time performance, however, is collaboration between apps: an event occurring in one app can trigger an action in another.

Use Cases for Modern Tech Stacks

Real-time Chat: Two users are connected in a mobile chat app. A chat message typed by one user is an event that triggers an action to display the message in the other user’s chat app.

Live Data Dashboard: A user monitors a device's state through a dashboard. A change in one of the device's parameters is an event that triggers an update, and the value displayed in the dashboard changes in real time.

Geolocation: A bus sends its location to a passenger’s mobile device. A change in the location of the bus is an event that triggers a change in the location of the bus icon displayed on the map on the passenger’s mobile device.
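All three use cases follow the same pattern: one side publishes an event, and the other side has registered an action to run when it arrives. The sketch below illustrates that pattern with an in-process event bus. The `EventBus` class and the event name `chat.message` are hypothetical, and a real app would carry the event over a network connection rather than a function call.

```python
from collections import defaultdict


class EventBus:
    """In-process stand-in for the network between two app instances."""

    def __init__(self):
        # Maps an event name to the list of actions registered for it.
        self._handlers = defaultdict(list)

    def subscribe(self, event_name, handler):
        """Register an action to run whenever event_name is published."""
        self._handlers[event_name].append(handler)

    def publish(self, event_name, payload):
        """Fire an event; every registered action receives the payload."""
        for handler in list(self._handlers[event_name]):
            handler(payload)


bus = EventBus()

# Receiver's app: the action is "display the message".
displayed = []
bus.subscribe("chat.message", displayed.append)

# Sender's app: typing a message is the event.
bus.publish("chat.message", {"from": "alice", "text": "hi"})
```

Swapping the payload for a device reading or a bus location gives the dashboard and geolocation cases without changing the structure.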

Building a real-time app with a modern tech stack

Let’s dive into the development process of building a real-time app. A simple in-app chat feature is a great showcase. In its simplest form, two users can chat in real time via a chat interface.

In this case, you would develop the app on a standard TCP/IP stack, and both users would use their own app instances to communicate over the Internet.

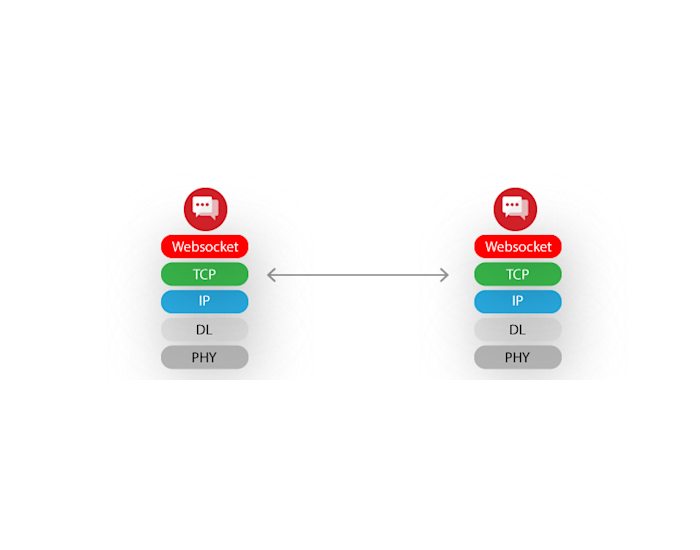

What about the protocol stack for this app?

Since the app instances talk to each other directly, WebSocket is likely the choice here. WebSocket works on the application layer and provides full duplex communication over TCP.
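One way to see that WebSocket is an application-layer protocol on top of TCP is its opening handshake: the connection starts as an HTTP request, and the server proves it understands the upgrade by hashing the client's `Sec-WebSocket-Key` header with a fixed GUID defined in RFC 6455. The sketch below computes that response value. The function name is made up for illustration, and this covers only the handshake step, not a working WebSocket server.

```python
import base64
import hashlib

# Fixed GUID defined by RFC 6455 for the opening handshake.
WEBSOCKET_GUID = "258EAFA5-E914-47DA-95CA-C5AB0DC85B11"


def websocket_accept(sec_websocket_key: str) -> str:
    """Return the Sec-WebSocket-Accept value for a client's handshake key."""
    digest = hashlib.sha1(
        (sec_websocket_key + WEBSOCKET_GUID).encode("ascii")
    ).digest()
    return base64.b64encode(digest).decode("ascii")


# Sample key taken from the example handshake in RFC 6455.
accept_value = websocket_accept("dGhlIHNhbXBsZSBub25jZQ==")
```

After this exchange, both sides keep the same TCP connection open and can send frames in either direction at any time, which is what makes the link full duplex.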

Note

Socket.IO is one of the most popular libraries for real-time communication built on WebSockets. Although all the major browsers now support the WebSocket interface natively, Socket.IO wraps the connection and adds features on top of it, such as a fallback transport and an acknowledgment mechanism.

Does the app possess real-time communication capabilities? Perhaps yes, because the app instances have a direct TCP-based connection. However, if the geographical separation between the two app users is large, the real-time performance will deteriorate.

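This is not the best way to build a modern chat app, because even a little scale makes the inter-app communication progressively more complex. In a chat among N users, each app instance must maintain a direct connection to each of the other N - 1 instances, so the looming bottleneck is the number of connections each app has to handle.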
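A quick calculation shows how fast the connection count grows when every app instance connects directly to every other one. The function names below are illustrative, and the model assumes one connection per pair of users.

```python
def connections_per_app(users: int) -> int:
    """Direct connections each app instance must hold in a full mesh."""
    return max(users - 1, 0)


def total_mesh_connections(users: int) -> int:
    """Total distinct connections when every pair of users is linked."""
    return users * (users - 1) // 2


# (users, connections per app, total connections)
growth = [
    (n, connections_per_app(n), total_mesh_connections(n))
    for n in (2, 10, 100)
]
```

For 2, 10, and 100 users, each app holds 1, 9, and 99 connections, and the mesh as a whole holds 1, 45, and 4,950. The per-app count grows linearly, but the total grows quadratically.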

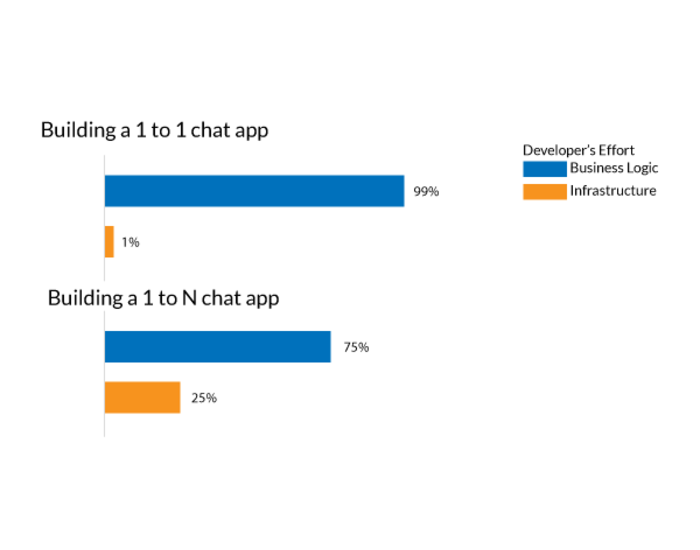

A Developer’s Dilemma With a Modern Data Stack

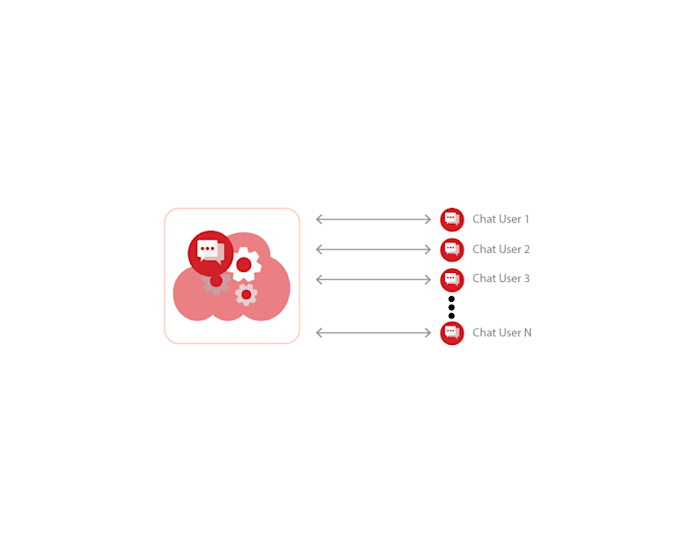

Building a 1:1 or 1:N chat app is easy if you are a seasoned programmer. However, in a 1:N chat app, a significant amount of time must be spent managing the connections between the app instances. As a developer, you will experience some frustrating moments when you can’t focus on the app and UI/UX features just because the connection logic isn’t working right.

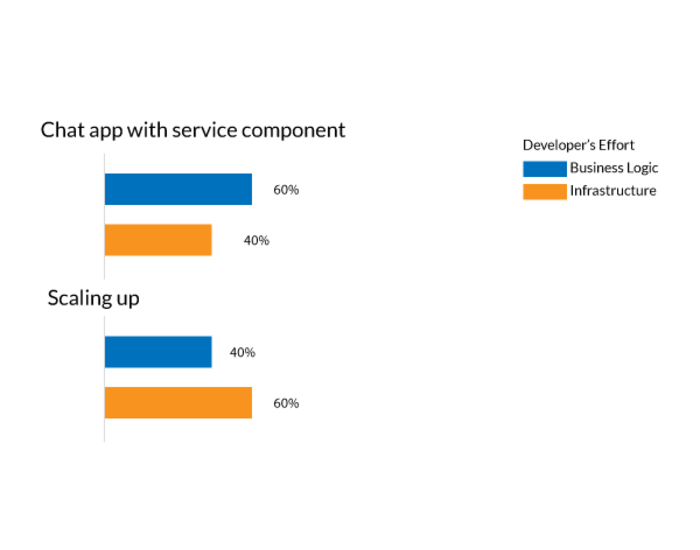

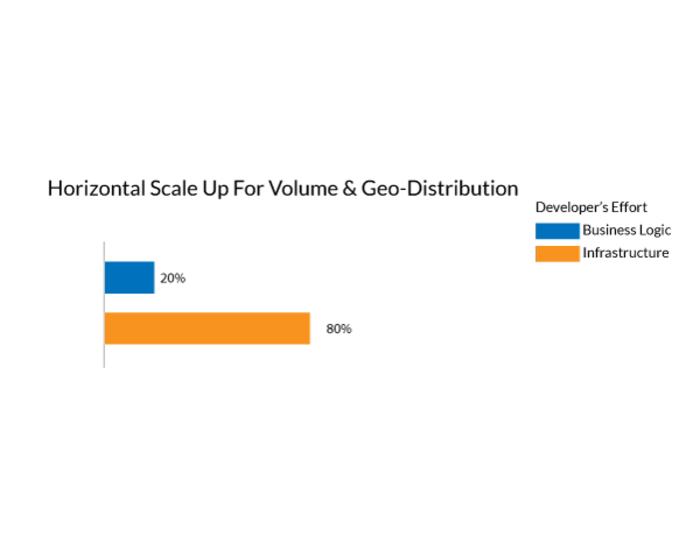

In this case, the app and UI/UX features constitute the app's business logic, and connection management is part of the application infrastructure. When you upgrade the app to 1:N chat, the share of development time spent on infrastructure jumps significantly.

Using Hosted Services as Part of Your Tech Stack

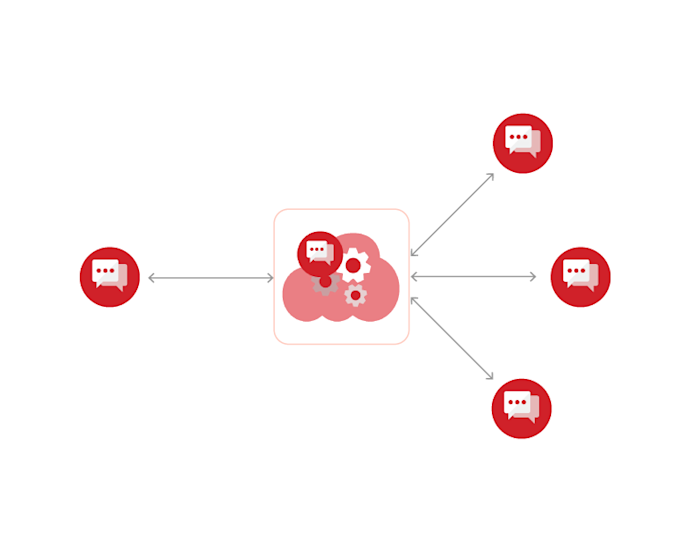

To scale effectively, use an architecture where each chat app instance handles just one connection, regardless of the number of users. This is achieved by introducing a centralized chat service component.

With this arrangement, the chat client is simplified. However, the chat service component must now handle and orchestrate all messages between all the chat app instances.

The protocol stack can remain the same across all components, but since app instances no longer connect directly, the chat service must maintain active connections with each instance, quickly relaying messages for real-time performance.
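The centralized arrangement can be sketched as a small relay. Each client holds exactly one connection, modeled here as an inbox list, and the service fans each message out to everyone else. The `ChatService` class and its method names are hypothetical, and a real service would hold network connections such as WebSockets instead of in-memory lists.

```python
class ChatService:
    """Central relay: every app instance keeps a single connection to it."""

    def __init__(self):
        # Maps a user name to that user's inbox (a stand-in for a connection).
        self._inboxes = {}

    def connect(self, user):
        """Open the user's one connection and return its inbox."""
        inbox = []
        self._inboxes[user] = inbox
        return inbox

    def disconnect(self, user):
        self._inboxes.pop(user, None)

    def send(self, sender, text):
        """Relay a message to every connected user except the sender."""
        if sender not in self._inboxes:
            raise KeyError(f"{sender} is not connected")
        delivered = 0
        for user, inbox in self._inboxes.items():
            if user != sender:
                inbox.append((sender, text))
                delivered += 1
        return delivered

    def connection_count(self):
        return len(self._inboxes)


service = ChatService()
alice_inbox = service.connect("alice")
bob_inbox = service.connect("bob")
carol_inbox = service.connect("carol")
delivered = service.send("alice", "hello everyone")
```

The client side stays trivial no matter how many users join, while all of the fan-out work moves into `send`, which is exactly the part that has to scale.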

Vertical and Horizontal Scaling Data Architecture

With the revised architecture, each chat service component acts as a chat room that hosts multiple chat users via their chat app instances.

Vertical Scaling Architecture

Handling growth in the number of chat users is now the sole responsibility of the chat service component. Imagine a public chat room where hundreds of users can join or leave at any given moment. This can add significant latency to messages exchanged through the chat service component.

As the chat service scales vertically, more CPU and memory resources are needed to support additional users, but this has a limit. Although connection management at the client level is simplified, the service component faces challenges as more users join, including managing connectivity, state, and status. Over time, focus will shift from business logic to ensuring the reliability and resilience of the infrastructure.

Horizontal Data Scaling

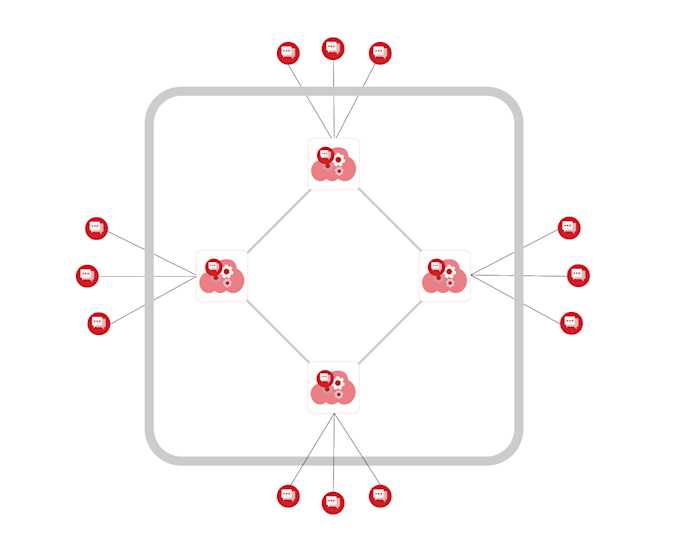

The other scalability challenge is handling users who join from geographically diverse locations.

Real-time performance suffers if this geographical spread is not taken into account. To counter this, multiple chat service components need to be spawned at various geographical locations, each aggregating the users near it. This is known as horizontal scaling.

At this stage, the chat service architecture needs to handle users from multiple regions and coordinate with other components, making it more challenging for developers.

The chat service is now distributed across multiple locations, with each message replicated at every site. This creates an inner partition for orchestrating service components and an outer partition for managing connectivity with chat app instances.

This brings additional complexity because the chat service components have to deal with many kinds of messages, ranging from users’ chat messages to state synchronization between all chat service component instances.

Note

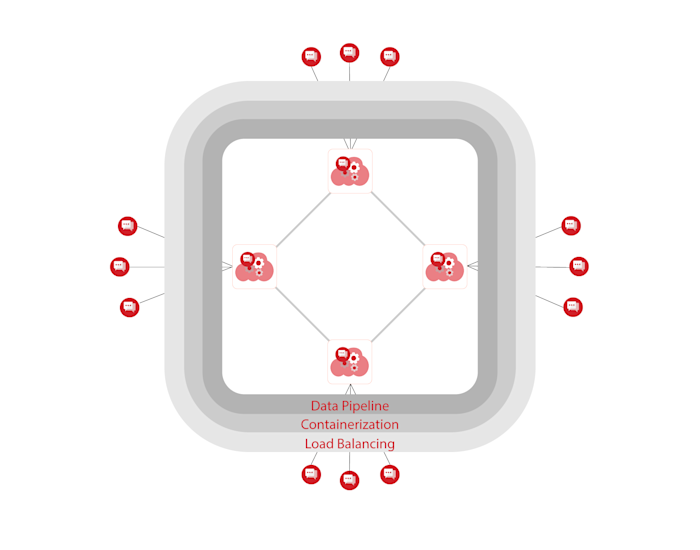

Handling diverse messages at scale requires an effective data processing pipeline that provides a queuing mechanism to process messages. Several message queue systems are available for this scenario. Kafka is a popular platform for building real-time data pipelines that produce and consume messages.
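The queuing idea can be illustrated with Python's standard `queue` module: producers put messages of different kinds on a queue, and a worker consumes them in order and routes each by type. This is an in-process sketch only and does not use or imitate the Kafka API. The message shape (`type` and `payload` keys) and the function name are assumptions made for the example.

```python
import queue
import threading


def run_pipeline(messages):
    """Push messages through a queue and route them by type in a worker."""
    pending = queue.Queue()
    routed = {"chat": [], "state_sync": []}
    stop = object()  # sentinel that tells the worker to finish

    def worker():
        while True:
            message = pending.get()
            if message is stop:
                break
            # Unknown types get their own bucket instead of being dropped.
            routed.setdefault(message["type"], []).append(message["payload"])

    consumer = threading.Thread(target=worker)
    consumer.start()
    for message in messages:
        pending.put(message)
    pending.put(stop)
    consumer.join()
    return routed


routed = run_pipeline([
    {"type": "chat", "payload": "hi"},
    {"type": "state_sync", "payload": {"alice": "online"}},
    {"type": "chat", "payload": "how are you?"},
])
```

Because the queue decouples producers from the consumer, a burst of incoming messages is buffered rather than lost, and more workers can be added when one is not enough.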

Managing the digital states and world views across all app components

As the chat service scales horizontally, maintaining a uniform state, such as user online status, across all instances becomes a huge challenge. Previously, a single component managed this state, but now it must be synchronized across multiple components.
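One possible way to reconcile user status across instances is a last-writer-wins merge, where each status carries a timestamp and the newest update prevails. This is a sketch of one common technique, not a description of how any particular chat service does it. The region names, the integer timestamps, and the function name are hypothetical.

```python
def merge_presence(local, remote):
    """Merge two presence maps of user -> (status, timestamp).

    The entry with the later timestamp wins. Ties are broken by comparing
    the status strings so that both sides reach the same result.
    """
    merged = dict(local)
    for user, (status, stamp) in remote.items():
        if user not in merged:
            merged[user] = (status, stamp)
            continue
        current_status, current_stamp = merged[user]
        if (stamp, status) > (current_stamp, current_status):
            merged[user] = (status, stamp)
    return merged


us_east = {"alice": ("online", 10), "bob": ("offline", 5)}
eu_west = {"alice": ("offline", 12), "carol": ("online", 7)}

view_from_us = merge_presence(us_east, eu_west)
view_from_eu = merge_presence(eu_west, us_east)
```

Both instances end up with the same view regardless of which one initiates the merge, which is the property a synchronized state needs. Real deployments also have to deal with clock skew, which this sketch ignores.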

Managing the dynamic allocation of chat service components based on regional volumes

To build a scalable system, consider the load on each service component. If too many users log in from one location, a single component may not be able to handle the load, and it also becomes a single point of failure. Horizontal scaling is needed to avoid these issues.

To mitigate these challenges, engineers must implement a load balancer at each location to spread the chat traffic across multiple chat service components. In addition, a set of protocols must be defined to spawn chat service components dynamically in response to traffic surges. Newly spawned components must also sync up with the existing ones and take part in data synchronization.
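The two ideas in this paragraph, spreading traffic and spawning components on demand, can be combined in a small sketch. It sends each new user to the least-loaded component and adds a component when all existing ones are full. The `RegionalBalancer` class, its capacity parameter, and the least-connections policy are assumptions for illustration, and the sketch leaves out the state sync that a new component would need.

```python
class RegionalBalancer:
    """Least-connections balancer that spawns components when all are full."""

    def __init__(self, capacity_per_component):
        self.capacity = capacity_per_component
        self.loads = [0]  # start with a single chat service component

    def assign(self):
        """Place one new user and return the index of the chosen component."""
        index = min(range(len(self.loads)), key=self.loads.__getitem__)
        if self.loads[index] >= self.capacity:
            # Even the least-loaded component is full: spawn a new one.
            self.loads.append(0)
            index = len(self.loads) - 1
        self.loads[index] += 1
        return index


balancer = RegionalBalancer(capacity_per_component=2)
placements = [balancer.assign() for _ in range(5)]
```

With a capacity of two users per component, five arrivals fill the first component, trigger a second, fill that, and then trigger a third.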

Note

When deploying an application for scale across multiple geolocations, elasticity matters more than scalability alone. Based on the user volume, the application should be able to scale up or down, using just the computing resources it needs to handle the traffic. That’s where containerization technology provides a lot of flexibility. A container can be deployed as a standalone execution context within a host operating system or server, with its own isolated file system and resources.

Docker is the most popular container technology available today. A Docker application is pre-built as an image and then run as a container within a host OS, behaving like an independent system with its own allocated CPU and memory resources.

Managing a Docker-based elastic application deployment also requires a container management system that provides an application-wide view of all the containers. Kubernetes is one such tool. Together, Docker and Kubernetes provide a complete suite of tools for managing and administering containers.

With the additional complexity around horizontal scalability, there is a need for additional layers of architectural components to manage the service components over and above the application stack.

Security and access control of your modern tech stack

At a global scale, security and access control become paramount. It is necessary to block unauthorized users, deny access to hackers, and withstand DDoS attacks by rogue users. Security is a subject in its own right, and for such a vast network, a specialized layer of security protocols must reside at the network's outer edge to thwart any possible breach. All of this adds to the complexity of the inner partition and the chat service components.
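As a small illustration of access control at the edge, the sketch below issues and verifies HMAC-signed tokens so that a connection attempt with a forged or tampered token is rejected before it reaches the chat service. The token format, the hard-coded secret, and the function names are hypothetical. A production system would use an established scheme with expiry and key rotation.

```python
import hashlib
import hmac

# Hypothetical shared secret; a real deployment would load this from secure storage.
SECRET = b"demo-secret"


def sign(user_id):
    """Return a hex HMAC-SHA256 signature for the user id."""
    return hmac.new(SECRET, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


def issue_token(user_id):
    """Build a token of the form '<user_id>.<signature>'."""
    return f"{user_id}.{sign(user_id)}"


def verify_token(token):
    """Return the user id if the signature is valid, otherwise None."""
    user_id, separator, signature = token.rpartition(".")
    if not separator:
        return None
    expected = sign(user_id)
    # compare_digest runs in constant time to avoid leaking match length.
    if hmac.compare_digest(signature.encode("utf-8"), expected.encode("utf-8")):
        return user_id
    return None


good = verify_token(issue_token("alice"))
forged = verify_token("alice.deadbeef")
swapped = verify_token("mallory." + sign("alice"))
```

A valid token yields the user id, while a guessed signature or a signature copied from another user yields `None`.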

If your application has come this far, then, needless to say, it is popular and used by many users worldwide. As the saying goes in software engineering, 80% of code is written to check and handle errors, while only 20% does the real job. The same logic applies to handling extreme scale.

Infrastructure costs

Let's talk about cost – for both setting up and maintaining the platform infrastructure at scale.

If you add up the costs and the developer effort involved in managing your app's real-time infrastructure, the situation can get out of hand rather quickly. All of this comes at the expense of your web app's user experience, since the developers are busy tuning the infrastructure for real-time performance.

That’s where a specialized cloud service provider that handles the challenges of real-time scaling and expansion can be a boon.

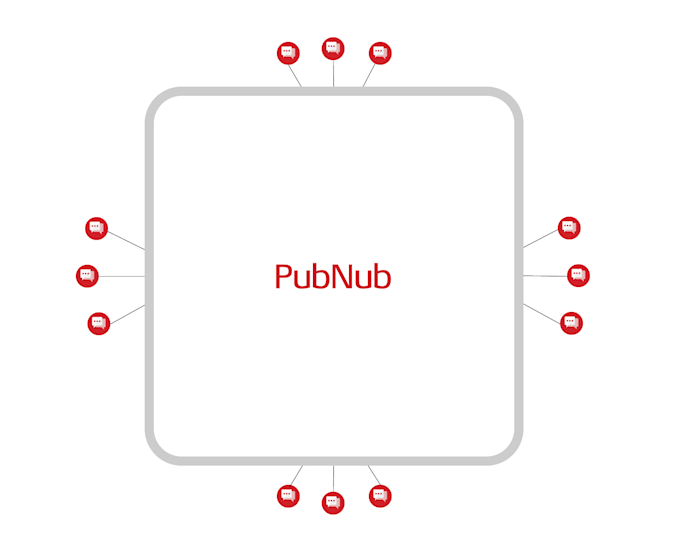

PubNub’s real-time data streaming service solves every problem developers and IT teams face in scaling up a real-time application, both in terms of time and cost. With PubNub, your app’s real-time infrastructure is as simple as this.

Apart from handling all the heavy lifting associated with handling scale, PubNub guarantees 99.999% of data transmission reaches the recipient within a tenth of a second.

The most noteworthy feature of PubNub is that it is not entirely a black box system. PubNub’s infrastructure is like a semi-porous white box that allows you to plug in real-time message-handling components. Known as Functions, these components allow developers to build custom real-time data processing pipelines on the go. Consequently, PubNub can process data in motion rather than at rest, which has a significant upside for real-time data transfer.

Backed by a globally distributed data streaming network, PubNub handles all your chores related to infrastructure, from scaling to security, and more.

With this setup, PubNub becomes the de facto application-layer protocol responsible for transmitting your data from one app instance to another. You no longer have to worry about the infrastructure. You only need to focus on building an enticing UX for your end users and plug the relevant PubNub SDK into your client code to take care of everything real-time.

Getting started with your modern tech stack

Using a service like PubNub lets you focus on improving your app’s user experience, while it handles the infrastructure. As you add more real-time features, user satisfaction increases. The effort split remains constant, with PubNub seamlessly supporting your app regardless of user count.

Our experts are standing by to chat about your real-time app projects. Or, if kicking the tires is more your speed, sign up for a free trial, read through our docs, or check out our GitHub.