Until very recently, if you wanted to build a chatbot, you would use “natural language processing” (NLP) to understand and interpret what the user was asking, then attempt to fulfill that request using some backend logic, potentially integrated with a proprietary service. Solutions such as Dialogflow or Microsoft Bot Framework provide conversational AI based on machine learning and have traditionally been popular with developers creating chatbots.

OpenAI’s ChatGPT makes it easier than ever to create an AI-powered chatbot. Since the large language model (LLM) that powers ChatGPT is trained on a public dataset, you can easily enhance the user experience of your app by providing any information you would reasonably expect to exist in a public knowledge base.

PubNub powers real-time experiences for a huge range of industries and use cases, including customer support and in-app chat. All these real-time experiences are powered by data, either user-readable messages or metadata about your solution. Wouldn’t it be great to take advantage of that existing data to power a chatbot?

Some real-world example use cases:

Provide answers to commonly asked customer support questions.

Augment your existing data with additional content, for example, suggesting places to eat near a provided location.

Provide summaries of conversations based on the message history.

We published an article on this topic previously, and whilst that older article is still correct, the industry is moving very fast, and a few months is a long time.

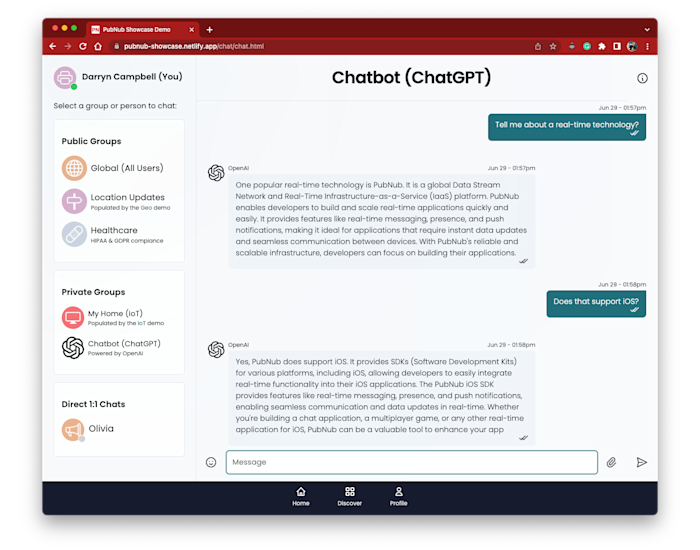

This article will discuss how to build a chatbot powered by OpenAI similar to the one you can see running within our PubNub showcase application, shown below.

High-Level Architecture

To create a chatbot like ChatGPT, you will need to use the OpenAI Chat API and sign up for an account to create an API key. The OpenAI API is accessible as a REST endpoint, so it can be called from pretty much any programming language, although most of their provided examples are written in Python.

The best way to extract data from PubNub to initiate or continue a conversation with the chatbot is to use Functions. Functions provide a serverless JavaScript container that runs in Node.js and allows you to execute business logic whenever an event occurs on the PubNub network, such as receiving a chat message. PubNub Functions are frequently used for real-time, on-the-fly language translation, profanity filtering, or to invoke a third-party service like OpenAI.

This example will work as follows:

The existing chat application is using PubNub to send and receive real-time messages.

A PubNub Function will listen for messages sent to a specific channel.

On its own, a single message does not provide any context to ChatGPT, so a portion of the conversation history will also be cached to allow an ongoing dialogue.

The PubNub Function will invoke the OpenAI API and retrieve the response.

The response will be published to the PubNub channel associated with your own chatbot.

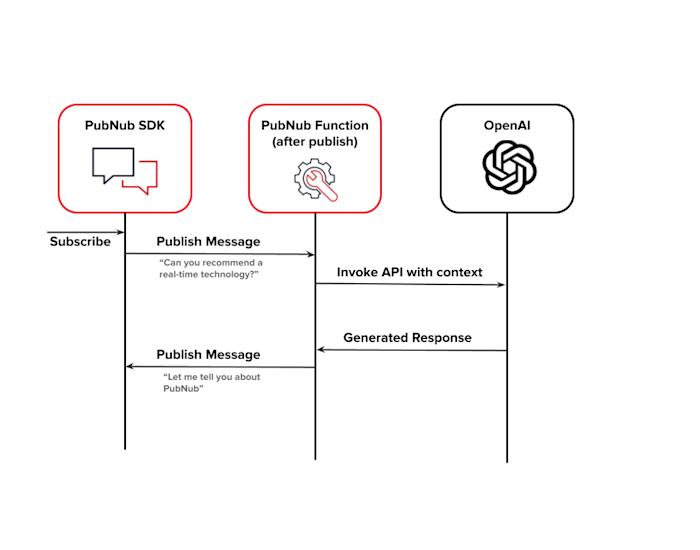

You can see this in the sequence diagram below:

The user types a message in your chat app. With 50+ SDKs, PubNub is able to support the vast majority of applications, and the message will be published to the channel representing the chatbot on the PubNub network.

A PubNub function is configured to be invoked after a publish event occurs on a channel. When a message is received, the channel history is retrieved to provide some context to the conversation, and this history, along with the user input and some additional context, is provided to ChatGPT through the Chat Completion API.

The ChatGPT API will generate responses suited to the conversation flow.

Within the PubNub function, a response is received from ChatGPT, which is parsed and published back into the PubNub network.

The application, having previously subscribed to receive messages from PubNub, will display the chatbot’s response.

Configuring your PubNub Function

Create your PubNub function as follows:

Prerequisites: If you are new to PubNub, follow our documentation to set up your account or visit our tour to understand what PubNub is all about.

Navigate to the admin dashboard.

Select Functions from the left-hand menu, and select the appropriate keyset where you want to create the function.

Select + Create New Module. Enter a Module Name and Module Description, select a Keyset, and then hit Create.

Select the Module you just created.

Select + Create New Function. Give the function a name and select the event type After Publish or Fire. This function will be invoked AFTER a message has been submitted to PubNub. Functions can be synchronous or asynchronous, and in this case, we just capture a copy of the message to send to OpenAI. You can find more details on the different types of functions described in our documentation.

The function channel should match the channel where you are publishing messages you want to send to OpenAI. You can use a wildcard here, so, for example, chatgpt.* will match any channel that starts with chatgpt. Since we want this function to talk to multiple people at the same time, a wildcard allows us to separate which user is involved in the active conversation so that we can respond on the appropriate channel. Where multiple people are talking to OpenAI simultaneously, PubNub takes care of creating multiple instances of your function. For example, chatgpt.dave and chatgpt.simon are two different channels, but both will invoke the PubNub function listening on chatgpt.*. How PubNub handles channel wildcards is explained in more detail in our documentation.

Select My Secrets and create a new secret for the OpenAI API key; the code below assumes this secret is called OPENAI_API_KEY.
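To illustrate how a per-user channel scheme like chatgpt.dave might be handled inside the function, here is a small sketch in plain JavaScript. The helper name userFromChannel and the CHANNEL_PREFIX constant are hypothetical, and the prefix check only mirrors the description above rather than PubNub's own wildcard matching. In a PubNub Function, the channel name would typically come from the incoming request (for example, request.channels[0], treated here as an assumption).

```javascript
// Hypothetical helper: extract the user portion of a channel name such as
// "chatgpt.dave". The prefix mirrors the chatgpt.* wildcard used in the text.
const CHANNEL_PREFIX = 'chatgpt.';

function userFromChannel(channel) {
  if (typeof channel !== 'string' || !channel.startsWith(CHANNEL_PREFIX)) {
    return null; // Not a channel this function is meant to handle
  }
  const user = channel.slice(CHANNEL_PREFIX.length);
  return user.length > 0 ? user : null; // "chatgpt." alone names no user
}
```

Because the user is encoded in the channel name, the function can respond on the same channel it received the message from without keeping any per-user state.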

Here is an example PubNub function that will invoke OpenAI / ChatGPT
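A minimal sketch of what such a function could look like follows. It is not the code from the showcase application: the helper names buildChatRequest and extractReply, the gpt-3.5-turbo model name, and the { sender, text } message shape are assumptions made for illustration. The two helpers are plain JavaScript, and the commented section suggests how they might be wired to the xhr, vault, and pubnub modules inside a PubNub Function.

```javascript
// Sketch only: buildChatRequest and extractReply are hypothetical helper names.
const OPENAI_CHAT_URL = 'https://api.openai.com/v1/chat/completions';

// Build the URL and HTTP options for a Chat Completion request.
function buildChatRequest(apiKey, messages) {
  return {
    url: OPENAI_CHAT_URL,
    options: {
      method: 'POST',
      headers: {
        'Content-Type': 'application/json',
        Authorization: 'Bearer ' + apiKey,
      },
      body: JSON.stringify({
        model: 'gpt-3.5-turbo', // assumption: substitute the model you use
        messages: messages,
      }),
    },
  };
}

// Pull the assistant's text out of a Chat Completion response body.
function extractReply(responseBody) {
  const parsed =
    typeof responseBody === 'string' ? JSON.parse(responseBody) : responseBody;
  const choice = parsed && parsed.choices && parsed.choices[0];
  return choice && choice.message ? choice.message.content : null;
}

// Inside a PubNub Function, the pieces might be wired together like this
// (assumes the xhr, vault, and pubnub modules of the Functions runtime):
//
//   const xhr = require('xhr');
//   const vault = require('vault');
//   const pubnub = require('pubnub');
//
//   export default (request) => {
//     // Ignore the bot's own replies so the function does not trigger itself.
//     if (request.message.sender === 'chatbot') return request.ok();
//     const messages = [{ role: 'user', content: request.message.text }];
//     return vault.get('OPENAI_API_KEY')
//       .then((apiKey) => {
//         const req = buildChatRequest(apiKey, messages);
//         return xhr.fetch(req.url, req.options);
//       })
//       .then((response) => pubnub.publish({
//         channel: request.channels[0],
//         message: { sender: 'chatbot', text: extractReply(response.body) },
//       }))
//       .then(() => request.ok())
//       .catch(() => request.abort());
//   };
```

Keeping the request building and response parsing in pure functions makes them easy to test outside the Functions runtime. The guard on the sender matters because the reply is published to a channel that the same function listens on.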

Practical Considerations when Calling the OpenAI API

Chat Completion API Properties

The code featured in this article uses the OpenAI Chat Completion API, but it is more configurable than is shown above.

Specifying stream to be true will return the OpenAI response as a data stream over server-sent events. In practical terms, this can reduce the perceived latency since the user will see a more immediate response, similar to how the ChatGPT web interface works.

model allows you to specify the language model used to generate the response. The API is powered by a family of models with different capabilities and price points, with models being upgraded and new models being added frequently.

max_tokens caps the number of tokens the API will generate in its response. In general, a lower limit produces shorter, less detailed output, but responses will be returned more quickly.

Message role allows you to specify the author of a message. The example in this article uses system to provide some instructions to OpenAI, user for messages sent by the end user, and assistant for responses previously provided by OpenAI.

The model can be further tweaked by specifying the temperature / top_p, frequency_penalty, and presence_penalty. Please see the OpenAI documentation for more information about these.
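As a rough illustration of how these properties fit together, the sketch below merges a set of defaults with per-request overrides to produce a Chat Completion request body. The helper name buildCompletionBody, the DEFAULT_OPTIONS object, and every default value shown are assumptions chosen for the example, not recommended settings.

```javascript
// Hypothetical defaults; tune them for your own use case.
const DEFAULT_OPTIONS = {
  model: 'gpt-3.5-turbo', // assumption: substitute the model you use
  max_tokens: 256,        // upper bound on generated tokens
  temperature: 0.7,       // OpenAI suggests adjusting temperature or top_p, not both
  frequency_penalty: 0,
  presence_penalty: 0,
  stream: false,          // true would return the response as server-sent events
};

// Merge defaults with per-request overrides and attach the messages array.
function buildCompletionBody(messages, overrides) {
  return Object.assign({}, DEFAULT_OPTIONS, overrides || {}, {
    messages: messages,
  });
}

// Example: a shorter, faster response for a quick answer.
const quickBody = buildCompletionBody(
  [{ role: 'user', content: 'Summarize our conversation.' }],
  { max_tokens: 64 }
);
```

Centralizing the defaults in one place makes it easier to experiment with the balance between response quality and latency.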

The API also allows you to define and specify custom functions that augment the capabilities of the model, which is outside the scope of this blog. If you would like to learn more, we have a separate post that illustrates how you can allow ChatGPT to publish to PubNub on your behalf, enabling you to say something like, “Send a message to the main channel telling them that I'll be ready in 5 minutes.” You could also allow ChatGPT to invoke your own business API, providing, for example, product information or order status information directly to the customer.

Providing Context to Chat

HTTP requests are stateless, so to engage in a long-running conversation with OpenAI, there needs to be a way to give some context to the API, to describe the conversation so far, and to provide metadata around that conversation.

The Chat Completion API accepts an array of messages, which allows the AI model to derive meaning from what otherwise might be an ambiguous question. Consider this example:

> [User] How big is the earth

> [OpenAI] The earth has a diameter of approximately 12,742 kilometers

> [User] I meant how heavy is it?

> [OpenAI] The Earth has a mass of approximately 5.97x10^24 kg

Clearly, to answer the question “How heavy is it” the AI needed to understand what “it” was.
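Expressed as the messages array sent with the second question, that exchange might look like the sketch below. The system instruction is a hypothetical placeholder; the other entries are taken from the conversation above.

```javascript
// The conversation so far, as it could be sent with the follow-up question.
const conversation = [
  // Hypothetical system instruction that sets the assistant's behavior
  { role: 'system', content: 'You are a helpful assistant.' },
  { role: 'user', content: 'How big is the earth' },
  {
    role: 'assistant',
    content: 'The earth has a diameter of approximately 12,742 kilometers',
  },
  // The new question only makes sense because the earlier turns are included
  { role: 'user', content: 'I meant how heavy is it?' },
];
```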

As you build your PubNub-powered chatbot, you will need to decide how much conversation context should be provided. If you are using PubNub functions, you have access to the entire message history for the channel, and the example provides the previous 10 messages in the conversation. You could, of course, provide a lot more, depending on your needs.

Having the chat context already within PubNub makes it trivial to provide that context to OpenAI. Note that the role will differ depending on whether the message was sent to OpenAI or received from OpenAI.
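A sketch of that mapping is shown below. It assumes each history entry has already been reduced to a { sender, text } object and that the bot's own messages carry the sender id chatbot; both the entry shape and the names BOT_SENDER, SYSTEM_PROMPT, and historyToMessages are hypothetical.

```javascript
const BOT_SENDER = 'chatbot'; // hypothetical sender id for the bot's messages
const SYSTEM_PROMPT = 'You are a helpful assistant.'; // hypothetical instruction

// Convert the most recent history entries into Chat Completion messages.
function historyToMessages(history, limit) {
  // Keep only the last `limit` entries (slice(-0) would return everything)
  const recent = limit > 0 ? history.slice(-limit) : [];
  const mapped = recent.map((entry) => ({
    // Messages from the bot were received from OpenAI; all others were sent to it
    role: entry.sender === BOT_SENDER ? 'assistant' : 'user',
    content: entry.text,
  }));
  return [{ role: 'system', content: SYSTEM_PROMPT }].concat(mapped);
}

// Example with a limit of 10, as used in the article.
const sampleMessages = historyToMessages(
  [
    { sender: 'dave', text: 'How big is the earth' },
    { sender: 'chatbot', text: 'The earth has a diameter of approximately 12,742 kilometers' },
    { sender: 'dave', text: 'I meant how heavy is it?' },
  ],
  10
);
```

Raising the limit gives the model more context at the cost of a larger request, so the value is worth tuning alongside the other API parameters.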

Long-Running Queries

As you develop your ChatGPT-like chatbot and configure the parameters used by your AI model, consider the expected response time to your query. By default, PubNub functions offer a maximum runtime of 10 seconds per invocation, which can be increased by contacting support. In general, a chatbot that takes too long to respond to a user’s question will be perceived negatively by the user, so it is good practice to dial in the parameters of your model to achieve a balance between responsiveness and capabilities, as mentioned earlier.

Some use cases for OpenAI can expect responses measured in minutes rather than seconds. If you are integrating OpenAI into your PubNub solution and expect the duration of the response to exceed the default Function runtime, please get in touch with us, and we can provide recommendations and advice.

In Summary

Using a combination of both PubNub and OpenAI / ChatGPT, it is possible to quickly create immensely powerful real-time solutions to add AI and chat to any online experience.

When you are ready to get started, visit our admin dashboard to sign up for a free set of PubNub keys. We have a number of tutorials and demos covering every industry, or check out our PubNub showcase (also available on GitHub) to see an example chatbot powered by OpenAI.